本篇内容介绍了“怎么联合使用Spark Streaming、Broadcast、Accumulaor”的有关知识,在实际案例的操作过程中,不少人都会遇到这样的困境,接下来就让小编带领大家学习一下如何处理这些情况吧!希望大家仔细阅读,能够学有所成!

广播可以自定义,通过Broadcast、Accumulator联合可以完成复杂的业务逻辑。

以下代码实现在本机9999端口监听,并向连接上的客户端发送单词,其中包含黑名单的单词Hadoop,Mahout和Hive。

|

package org.scala.opt

import java.io.{PrintWriter, IOException}

import java.net.{Socket, SocketException, ServerSocket}

case class ServerThread(socket : Socket) extends Thread("ServerThread") {

override def run(): Unit = {

val ptWriter = new PrintWriter(socket.getOutputStream)

try {

var count = 0

var totalCount = 0

var isThreadRunning : Boolean = true

val batchCount = 1

val words = List("Java Scala C C++ C# Python JavaScript",

"Hadoop Spark Ngix MFC Net Mahout Hive")

while (isThreadRunning) {

words.foreach(ptWriter.println)

count += 1

if (count >= batchCount) {

totalCount += count

count = 0

println("batch " + batchCount + " totalCount => " + totalCount)

Thread.sleep(1000)

}

//out.println此类中的方法不会抛出 I/O 异常,尽管其某些构造方法可能抛出异常。客户端可能会查询调用 checkError() 是否出现错误。

if(ptWriter.checkError()) {

isThreadRunning = false

println("ptWriter error then close socket")

}

}

}

catch {

case e : SocketException =>

println("SocketException : ", e)

case e : IOException =>

e.printStackTrace();

} finally {

if (ptWriter != null) ptWriter.close()

println("Client " + socket.getInetAddress + " disconnected")

if (socket != null) socket.close()

}

println(Thread.currentThread().getName + " Exit")

}

}

object SocketServer {

def main(args : Array[String]) : Unit = {

try {

val listener = new ServerSocket(9999)

println("Server is started, waiting for client connect...")

while (true) {

val socket = listener.accept()

println("Client : " + socket.getLocalAddress + " connected")

new ServerThread(socket).start()

}

listener.close()

}

catch {

case e: IOException =>

System.err.println("Could not listen on port: 9999.")

System.exit(-1)

}

}

}

|

以下代码实现接收本机9999端口发送的单词,统计黑名单出现的次数的功能。

|

package com.dt.spark.streaming_scala

import org.apache.spark.streaming.{Seconds, StreamingContext}

import org.apache.spark.{SparkConf, Accumulator}

import org.apache.spark.broadcast.Broadcast

/**

* 第103课: 动手实战联合使用Spark Streaming、Broadcast、Accumulator实现在线黑名单过滤和计数

* 本期内容:

1,Spark Streaming与Broadcast、Accumulator联合

2,在线黑名单过滤和计算实战

*/

object _103SparkStreamingBroadcastAccumulator {

@volatile private var broadcastList : Broadcast[List[String]] = null

@volatile private var accumulator : Accumulator[Int] = null

def main(args : Array[String]) : Unit = {

val conf = new SparkConf().setMaster("local[5]").setAppName("_103SparkStreamingBroadcastAccumulator")

val ssc = new StreamingContext(conf, Seconds(5))

ssc.sparkContext.setLogLevel("WARN")

/**

* 使用Broadcast广播黑名单到每个Executor中

*/

broadcastList = ssc.sparkContext.broadcast(Array("Hadoop", "Mahout", "Hive").toList)

/**

* 全局计数器,用于通知在线过滤了多少各黑名单

*/

accumulator = ssc.sparkContext.accumulator(0, "OnlineBlackListCounter")

ssc.socketTextStream("localhost", 9999).flatMap(_.split(" ")).map((_, 1)).reduceByKey(_+_).foreachRDD {rdd =>{

if (!rdd.isEmpty()) {

rdd.filter(wordPair => {

if (broadcastList.value.contains(wordPair._1)) {

println("BlackList word %s appeared".formatted(wordPair._1))

accumulator.add(wordPair._2)

false

} else {

true

}

}).collect()

println("BlackList appeared : %d times".format(accumulator.value))

}

}}

ssc.start()

ssc.awaitTermination()

ssc.stop()

}

}

|

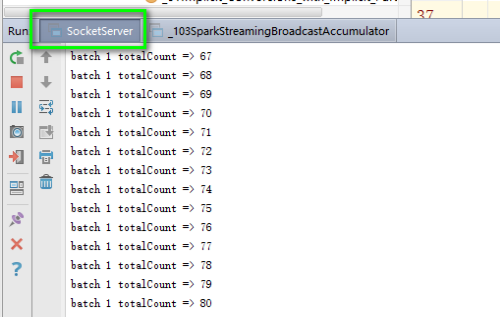

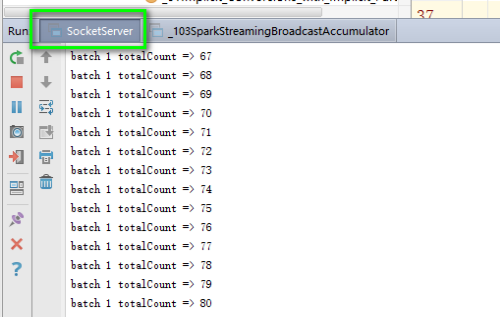

Server发送端日志如下,不断打印输出的次数。

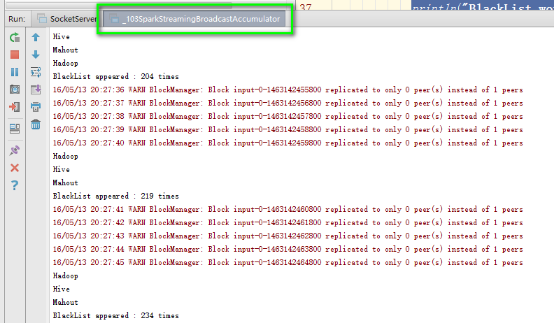

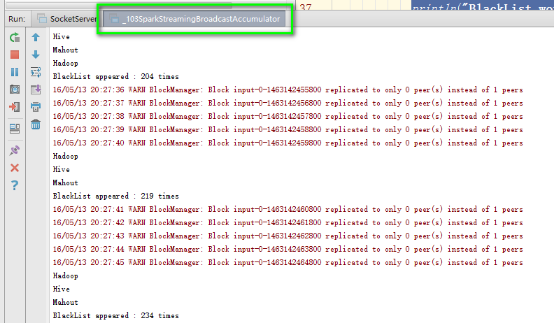

Spark Streaming端打印黑名单的单词及出现的次数。

“怎么联合使用Spark Streaming、Broadcast、Accumulaor”的内容就介绍到这里了,感谢大家的阅读。如果想了解更多行业相关的知识可以关注蜗牛博客网站,小编将为大家输出更多高质量的实用文章!

免责声明:本站发布的内容(图片、视频和文字)以原创、转载和分享为主,文章观点不代表本网站立场,如果涉及侵权请联系站长邮箱:niceseo99@gmail.com进行举报,并提供相关证据,一经查实,将立刻删除涉嫌侵权内容。

评论